When building embedded systems, there are typically at least a few specialized tools required. This includes things like the build tool, cross-compiler, unit test tools or documentation generators.

Getting all of these things set up on your machine can be tedious. And then when it's time to bring someone else on to the project, setting up the build environment just takes extra time.

In the past, I've written about using Vagrant to define build environments as code. That solution works well, but the environments that are created are full virtual machines and require quite a bit of disk space and time to build.

A lighter-weight and simpler approach is to use Docker.

Hint: If you can't make it through all of this, check out the example over at GitHub.

Intro to Docker

Docker creates containers instead of full-blown virtual machines. These are similar to virtual machines in that they provide isolated computing environments, but they are much lighter-weight (in resources) because containers share the host's operating system kernel instead of each running a complete guest OS. (On Windows and macOS, Docker runs Linux containers inside a small virtual machine that they all share.)

Docker has many, many features, but to create a build environment we only care about a few of them.

First, there is a build step that is used to create an image from a Dockerfile. An image is just like it sounds — it has an OS and a bunch of installed applications (in our case, the ones you need to build your app). The Dockerfile is the recipe for building the image. You only need to rebuild the image if you want to change the installed applications or its configuration in some way.

Once the image is built, you can then run the image — to create a container — and execute whatever commands you want in it. This is how you can execute your builds from inside the environment in the container. You don't need to rebuild the image each time you want to run.

With Docker, there is also a way to share files between the host (your computer) and the containers. This is used to give your build environment access to your code, and to get any build outputs out of the container.

Installing Docker

Of course you'll need to install Docker for this all to work. You can do this on Windows, Linux or MacOS. Detailed instructions for installation (and much more!) are in the Docker documentation.

Define the environment

The first thing to do is to define your build environment with a Dockerfile. Here's an example:

```dockerfile
FROM ubuntu:18.04
WORKDIR /app
RUN apt-get update
RUN apt-get install -y gcc ruby
RUN gem install ceedling
```

The FROM command tells Docker what image to start with. Ubuntu is a really good choice for Linux-based build environments (these shared base images are hosted on Docker Hub, and there are lots of them).

WORKDIR sets the working directory for the instructions that follow it (and for commands run in the container later) to a new folder called /app, which Docker creates if it doesn't already exist. This is a handy way to keep our files separate from the others in the container.

Finally, the RUN commands specify commands to be run at build time. This is a bit confusing, because these are not run when "running" the image, only when building. But this is how we run commands to install packages on the system. In this case I'm installing the dependencies necessary to compile a C application and unit test it with Ceedling.

You'd want to install whatever is required by your build environment here. In addition to using the apt package manager, you can do all kinds of things like installing from tarballs or pulling directly from a source code repository.

To use this Dockerfile to build an image, save it as a file named Dockerfile in your project folder and run:

```shell
$ docker build -t my-environment .
```

This will build a Docker image named my-environment from the Dockerfile in the current directory.

Running commands

Once an image has been built, the image can be run with the run command:

```shell
$ docker run my-environment
```

What does this do? Well, nothing really, because we haven't told it to do anything. One neat thing about the run command, though, is that any arguments tacked on to the end of the command are passed to and executed in the container:

```shell
$ docker run my-environment pwd
/app
```

If we tell the container to execute pwd, it tells us that the current directory is /app (remember the WORKDIR from before?).

This is how we can use the container to run make or gcc or ceedling or whatever.
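One detail worth knowing: the arguments after the image name are executed directly in the container, not handed to a shell. So `docker run my-environment gcc --version && ruby --version` runs only `gcc` in the container, and the host shell then runs `ruby` on your own machine. Below is a minimal sketch of a helper that passes a whole command line to `sh -c` inside the container instead. The function name `run_in_env` is hypothetical, and it assumes the my-environment image built above.

```shell
#!/usr/bin/env bash

# Hypothetical helper: run a full shell command line inside the my-environment
# image. Arguments after the image name are exec'd directly (no shell), so
# operators like && and | need an explicit "sh -c" inside the container.
run_in_env() {
    docker run my-environment sh -c "$1"
}
```

Usage: `run_in_env "gcc --version && ruby --version"` runs both commands in the same container. Quote the whole command line so your host shell doesn't split it first.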

Share the files

In order to share files between the host and the container, we need to set up a bind mount. This is done with the --mount option, which has to be passed every time the run command is executed. The goal is to mount the current host directory at the WORKDIR (/app in this case) in the container.

Here is a Linux version using pwd to get the current host directory:

```shell
$ docker run --mount type=bind,source="$(pwd)",target=/app my-environment
```

And here is a Windows version using cd to get the current host directory:

```
> docker run --mount type=bind,source="%cd%",target=/app my-environment
```

You can test this by passing an ls command to be run in the container. It should show you the contents of your host files:

$ docker run --mount type=bind,source="$(pwd)",target=/app my-environment

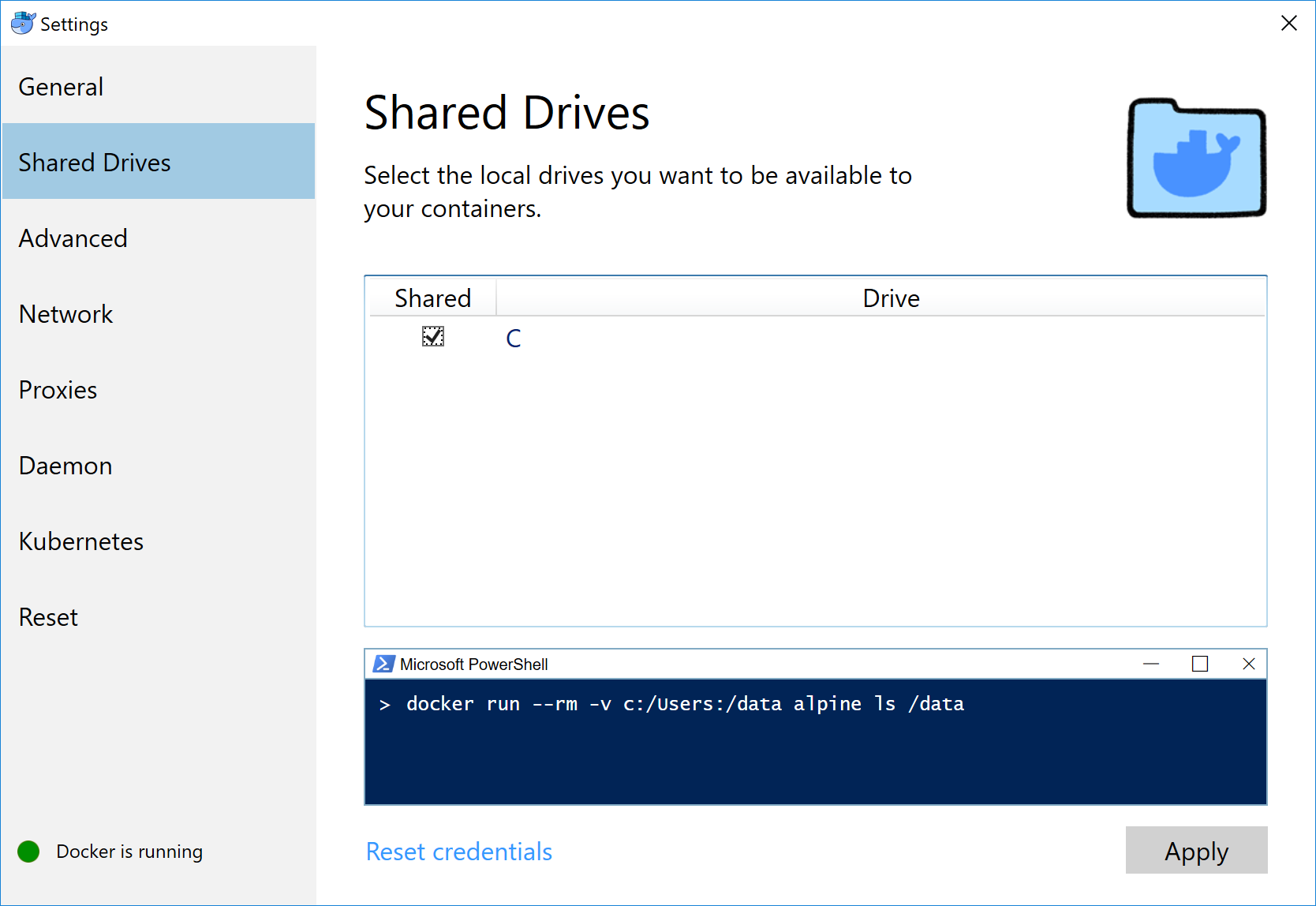

< your host files listed here >Note: You may need to enable sharing of your host drive from within Docker. On Windows it looks like this:
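Because the mount option has to be repeated on every run, it can help to wrap it in a function. Here is a minimal sketch, assuming the my-environment image from above; the function name `run_mounted` is hypothetical. It also adds `docker run`'s `--rm` flag, which deletes the container once the command finishes. Without it, every run leaves a stopped container behind (you can see them with `docker ps -a`).

```shell
#!/usr/bin/env bash

# Hypothetical helper: run a command in a fresh my-environment container with
# the current host directory bind-mounted at /app (the image's WORKDIR).
# --rm removes the container after the command exits.
run_mounted() {
    docker run --rm \
        --mount "type=bind,source=$(pwd),target=/app" \
        my-environment "$@"
}
```

Usage: `run_mounted ls` lists your host files from inside the container, just like the longer command above.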

A handy Windows script

Isn't this neat! Well it is, but it's a lot to type at the command line. It would be great to wrap this all up in a script that:

- Builds the Docker image if necessary.

- Runs the Docker image with whatever commands we give it.

- Automatically sets up the bind mount to share files.

Here is a simple batch script that will do this on Windows:

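```bat
@echo off

REM Get a unique image name based on the path of this folder.
REM Docker image names must be lowercase, so drop the drive colon, turn
REM backslashes and spaces into underscores, and lowercase every letter.
set "container_name=%cd%"
set "container_name=%container_name::=%"
set "container_name=%container_name:\=_%"
set "container_name=%container_name: =_%"
setlocal EnableDelayedExpansion
for %%L in (a b c d e f g h i j k l m n o p q r s t u v w x y z) do set "container_name=!container_name:%%L=%%L!"
endlocal & set "container_name=%container_name%"

REM Build the image if it doesn't exist.
docker image inspect %container_name% >nul 2>&1
if errorlevel 1 (
    docker build -t %container_name% .
)

REM Run the provided command (all of the script's arguments) in a throwaway container.
docker run --rm --mount type=bind,source="%cd%",target=/app %container_name% %*
```

This script creates a unique image name based on the current directory (lowercased, since Docker requires lowercase image names) and builds the image if it doesn't exist. If the image does exist, it isn't rebuilt.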

You can pass any commands to this script and they will be run in the container.

So if we name this script ev.bat and have the Dockerfile (defined above) in the same folder, we can run commands in the container like this:

```
> ev ceedling help
Sending build context to Docker daemon 262.7kB
Step 1/5 : FROM ubuntu:18.04
---> 3556258649b2
Step 2/5 : WORKDIR /app
---> Using cache
---> fbe45d2689fc
Step 3/5 : RUN apt-get update
---> Using cache
---> 63cdb0a9a603
Step 4/5 : RUN apt-get install -y curl ruby gcc
---> Using cache
---> 944af65013fd
Step 5/5 : RUN gem install ceedling
---> Using cache
---> b7ee8cffbd1f
Successfully built b7ee8cffbd1f
Successfully tagged c_dev_ceedling-docker:latest
ceedling clean # Delete all build artifacts and temporary products
ceedling clobber # Delete all generated files (and build artifacts)
ceedling environment # List all configured environment variables
...
```

Since this is the first time we are using the script, it builds the image and executes the ceedling command inside a container. The next time we use the script, the image is already built, so the command just runs in a new container created from the existing image:

```
> ev ceedling help
ceedling clean # Delete all build artifacts and temporary products
ceedling clobber # Delete all generated files (and build artifacts)
ceedling environment # List all configured environment variables
...
```

A Bash version of the script

Here's a Bash version of the same sort of thing:

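```shell
#!/usr/bin/env bash

# Create a unique image name based on the current folder.
# Docker image names must be lowercase, so drop the leading slash, turn the
# remaining slashes (and any spaces) into underscores, and lowercase the result.
container_name=$(pwd)
container_name="${container_name/\//}"
container_name="${container_name//\//_}"
container_name="${container_name// /_}"
container_name=$(printf '%s' "$container_name" | tr '[:upper:]' '[:lower:]')

# Build the Docker image if it doesn't exist.
if ! docker image inspect "$container_name" > /dev/null 2>&1 ; then
    docker build -t "$container_name" .
fi

# Run the command in a throwaway container (--rm removes it when it exits).
docker run --rm --mount type=bind,source="$(pwd)",target=/app "$container_name" "$@"
```

If you put it in a file called ev and make it executable (chmod +x ev), you can run commands like this: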

```shell
$ ./ev ceedling help
ceedling clean # Delete all build artifacts and temporary products
ceedling clobber # Delete all generated files (and build artifacts)
ceedling environment # List all configured environment variables
```

The thing about this sort of script is that you can put it in your source repository with the Dockerfile and the code, and then anyone who has Docker installed can use the tools available in the container... without having to know anything about Docker.
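The name mangling is the part of these scripts most likely to trip people up: Docker rejects image names that contain uppercase letters or spaces, so a folder like /home/Jeff/My Project has to be normalized first. Here is a sketch that pulls that step into a small function you can test on its own. The name `image_name_for_dir` is hypothetical, and it only handles slashes, spaces and letter case, not every character Docker disallows.

```shell
#!/usr/bin/env bash

# Hypothetical helper: turn a directory path into a Docker-friendly image name.
# Drops the leading slash, maps the remaining slashes and spaces to "_",
# and lowercases the result. Other characters are passed through unchanged.
image_name_for_dir() {
    local name="${1#/}"
    name="${name//\//_}"
    name="${name// /_}"
    printf '%s\n' "$name" | tr '[:upper:]' '[:lower:]'
}
```

For example, `image_name_for_dir "/home/Jeff/My Project"` prints `home_jeff_my_project`. In the ev script you would call it as `container_name=$(image_name_for_dir "$(pwd)")`.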

More features

I've put together a more complete example of using this strategy over on GitHub. It has a few more features, like allowing you to force a rebuild of the image or to open a terminal directly into a container. Both of these are helpful when you are first setting up the environment for a project.
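To give a feel for how those two features might look, here is a sketch of a Bash wrapper with a `--rebuild` option (rebuild the image even if it already exists) and a `--shell` option (open an interactive bash session in a container). The `ev` function and both option names are assumptions for illustration and may not match the GitHub example. `-it` is `docker run`'s standard pair of flags for an interactive terminal.

```shell
#!/usr/bin/env bash

# Sketch of an extended "ev" wrapper. The --rebuild and --shell options are
# hypothetical names chosen for this example.
ev() {
    # Derive a lowercase image name from the current folder, as in the script above.
    local name
    name=$(pwd | sed 's|^/||; s|[/ ]|_|g' | tr '[:upper:]' '[:lower:]')
    local mount="type=bind,source=$(pwd),target=/app"

    case "$1" in
        --rebuild)
            # Force a rebuild of the image, even if it already exists.
            docker build -t "$name" .
            return
            ;;
        --shell)
            # Open an interactive terminal in a throwaway container.
            docker run --rm -it --mount "$mount" "$name" bash
            return
            ;;
    esac

    # Default: build the image only if it is missing, then run the command.
    if ! docker image inspect "$name" > /dev/null 2>&1; then
        docker build -t "$name" .
    fi
    docker run --rm --mount "$mount" "$name" "$@"
}
```

To use it as a standalone ev script, add a final line `ev "$@"` so the script's arguments are forwarded to the function. Then `./ev --shell` drops you into the container, which is handy for trying out package installs before committing them to the Dockerfile.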